Using a multi-dimensional block means that you have to be careful about distributing this number of threads among all the dimensions. For instance, if we have a grid dimension of blocksPerGrid = (512, 1, 1), blockIdx.x will range between 0 and 511.Īs I mentioned here the total amount of threads in a single block cannot exceed 1024. They are indexed as normal vectors in C++, so between 0 and the maximum number minus 1. In CUDA, blockIdx, blockDim and threadIdx are built-in functions with members x, y and z.

#Cuda vector add dim3 code#

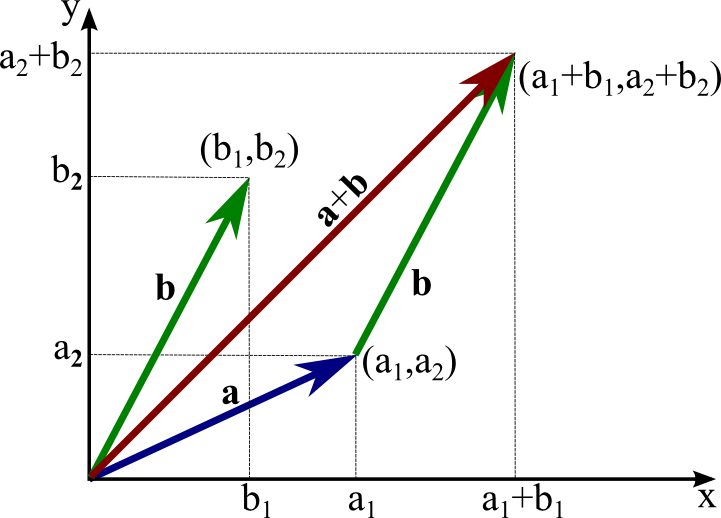

Int col = blockIdx.x * blockDim.x + threadIdx.x Īs you can see, it's similar code for both of them. Let's have a look at the code: int row = blockIdx.y * blockDim.y + threadIdx.y Indeed, in this way we can refer to both the $x$ and $y$ axis in the very same way we followed in previous examples. This is very useful, and sometimes essential, to make the threads work properly. This is because, if you only give a number to the kernel call as we did, it is assumed that you created a dim3 mono-dimensional variable, implying $y=1$ and $z=1$.Īs we are dealing with matrices now, we want to specify a second dimension (and, again, we can omit the third one). In the previous articles you didn't see anything like that, as we only discussed 1D examples, in which we didn't have to specify the other dimensions. This is evident when we define them before calling a kernel, with something like this: dim3 blocksPerGrid(512, 1, 1) In fact, grids and blocks are 3D arrays of blocks and threads, respectively. When we call a kernel using the instruction > we automatically define a dim3 type variable defining the number of blocks per grid and threads per block. Grids And BlocksĪfter the previous articles, we now have a basic knowledge of CUDA thread organisation, so that we can better examine the structure of grids and blocks. The goal is to add new concepts throughout this article, ending up with a 2D kernel, which uses shared memory to efficiently optimise operations. We will see different ways of achieving this. We have to compute every element in $C$, and each of them is independent from the others, so we can efficiently parallelise. It should be pretty clear now why matrix-matrix multiplication is a good example for parallel computation. The following figure intuitively explains this idea:

That is, in the cell $i$,$j$ of $M$ we have the sum of the element-wise multiplication of all the numbers in the $i$-th row in $A$ and the $j$-th column in $B$. The number inside it after the operation $M=A*B$ is the sum of all the element-wise multiplications of the numbers in $A$, row 1, with the numbers in $B$, column 1. Let's take the cell $1$,$1$ (first row, first column) of $M$. Why does this happen and how does it work? The answer is the same for both questions here. That is, the number of rows in the resulting matrix equals the number of rows of the first matrix $A$ and the number of columns of the second matrix $B$. The result of the multiplication $A*B$ (which is different from $B*A$!) is a $n \times w$ matrix, which we call $M$.

Also assume that $B$ is a $m \times w$ matrix. Assume that $A$ is a $n \times m$ matrix, which means that it has $n$ rows and $m$ columns. Let's say we have two matrices, $A$ and $B$. Matrix-Matrix Multiplicationīefore starting, it is helpful to briefly recap how a matrix-matrix multiplication is computed.

#Cuda vector add dim3 free#

Also, if you have any doubt, feel free to ask me for help in the comment section. But don't worry, at the end of the article you can find the complete code. everything not relevant to our discussion). So far you should have read my other articles about starting with CUDA, so I will not explain the "routine" part of the code (i.e. In this article we will use a matrix-matrix multiplication as our main guide.

#Cuda vector add dim3 how to#

option pricing under a binomial model and using finite difference methods (FDM) for solving PDEs.Īs usual, we will learn how to deal with those subjects in CUDA by coding. In subsequent articles I will introduce multi-dimensional thread blocks and shared memory, which will be extremely helpful for several aspects of computational finance, e.g. Today, we take a step back from finance to introduce a couple of essential topics, which will help us to write more advanced (and efficient!) programs in the future. In the previous article we discussed Monte Carlo methods and their implementation in CUDA, focusing on option pricing.
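To make the 2D indexing discussed in this article concrete, here is a minimal sketch of a naive matrix-matrix multiplication kernel in which each thread computes one cell of $M$. It is not the author's complete code: the names `matMulNaive` and `matMul`, the row-major 1D storage of the matrices and the 16 x 16 block size are all assumptions made for illustration, and the kernel does not use shared memory.

```cuda
#include <cuda_runtime.h>

// Naive kernel: one thread computes one cell M[row][col].
// A is n x m, B is m x w, M is n x w, all stored row-major in 1D arrays
// (the row-major layout is an assumption of this sketch).
__global__ void matMulNaive(const float* A, const float* B, float* M,
                            int n, int m, int w)
{
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;

    // The grid may overhang the matrix, so out-of-range threads do nothing.
    if (row < n && col < w) {
        float sum = 0.0f;
        for (int k = 0; k < m; ++k) {
            sum += A[row * m + k] * B[k * w + col];
        }
        M[row * w + col] = sum;
    }
}

// Hypothetical host helper: copies the inputs to the device, launches the
// kernel on a 2D grid of 2D blocks and copies the result back to hM.
cudaError_t matMul(const float* hA, const float* hB, float* hM,
                   int n, int m, int w)
{
    const size_t bytesA = sizeof(float) * n * m;
    const size_t bytesB = sizeof(float) * m * w;
    const size_t bytesM = sizeof(float) * n * w;

    float *dA = nullptr, *dB = nullptr, *dM = nullptr;
    cudaError_t err = cudaMalloc(&dA, bytesA);
    if (err == cudaSuccess) err = cudaMalloc(&dB, bytesB);
    if (err == cudaSuccess) err = cudaMalloc(&dM, bytesM);
    if (err == cudaSuccess) err = cudaMemcpy(dA, hA, bytesA, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) err = cudaMemcpy(dB, hB, bytesB, cudaMemcpyHostToDevice);

    if (err == cudaSuccess) {
        // 16 x 16 = 256 threads per block, well below the 1024 limit.
        dim3 threadsPerBlock(16, 16, 1);
        // Ceiling division so that the grid covers every row and column.
        dim3 blocksPerGrid((w + threadsPerBlock.x - 1) / threadsPerBlock.x,
                           (n + threadsPerBlock.y - 1) / threadsPerBlock.y,
                           1);
        matMulNaive<<<blocksPerGrid, threadsPerBlock>>>(dA, dB, dM, n, m, w);
        err = cudaGetLastError();  // reports an invalid launch configuration
    }
    // This copy waits for the kernel to finish before reading the result.
    if (err == cudaSuccess) err = cudaMemcpy(hM, dM, bytesM, cudaMemcpyDeviceToHost);

    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dM);
    return err;
}
```

Note that x is mapped to columns and y to rows, so the grid's x size is derived from $w$ and its y size from $n$. Calling `matMul(A, B, M, n, m, w)` with host arrays of the right sizes should fill `M` with the product, provided the call returns `cudaSuccess`.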
